AWS Glue

- Fully managed ETL service.

- AWS Glue is a cloud-optimized extract, transform, and load (ETL) service.

- It consists of three parts:

- A central metadata repository, the Glue Data Catalog.

- A Spark ETL engine.

- A flexible scheduler.

- It allows you to organize, locate, move and transform all your data sets, across your business, so you can put them to use.

- Glue is serverless.

- Point Glue at all your ETL jobs and hit run.

- We don’t need to provision, configure, or spin up servers, or manage their life cycle.

- AWS Glue offers a fully managed serverless ETL tool. This removes the overhead and the barriers to entry when an ETL service is required in AWS.

- Requirements

- S3 bucket

- Data folder and sub folders.

- Upload data

- Script location

- Temp directory

- IAM role

- Glue provides crawlers with automatic schema inference for your semi-structured and structured data sets.

- Crawlers automatically discover all your data sets, discover your file types, extract the schema and store all this information in a centralized metadata catalog for later queries and analysis.

- Glue automatically generates the scripts that you need to extract, transform, and load your data from source to target, so we don’t have to start from scratch.

- Set up

- Create a new bucket.

- Create a folder named data.

- Create a folder to serve as the temp directory for temporary files.

- Create a folder named Scripts.

- Inside data, create a folder named customer_database.

- In the customer_database folder, create a folder named customer_CSV.

- In customer_CSV, create a folder named dataload_{current timestamp}.

- Next, we need to have a CSV file to load data from.

- Upload the CSV file to S3 under the data folder.

- Go to IAM

- Create a new role for Glue to access the S3 bucket.
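The folder layout above can be sketched in Python. This is a minimal sketch, not the author's setup script: the `temp/` folder name, the timestamp format, and the helper names `folder_keys` and `create_folders` are assumptions made for illustration. S3 has no real folders, so each "folder" is created as an empty object whose key ends in `/`.

```python
from datetime import datetime, timezone


def folder_keys(now=None):
    """Return the S3 'folder' keys for the layout described above."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y%m%d%H%M%S")  # assumed timestamp format
    return [
        "data/",
        "temp/",  # assumed name for the temp directory
        "Scripts/",
        "data/customer_database/",
        "data/customer_database/customer_CSV/",
        f"data/customer_database/customer_CSV/dataload_{stamp}/",
    ]


def create_folders(s3, bucket, keys):
    """Create each prefix as an empty object; `s3` is a boto3 S3 client."""
    for key in keys:
        s3.put_object(Bucket=bucket, Key=key)
```

With credentials configured, `create_folders(boto3.client("s3"), "my-bucket", folder_keys())` would create the prefixes in a bucket that already exists; `my-bucket` is a placeholder.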

- AWS Glue Data Catalog

- Persistent metadata store.

- Data location

- Schema

- Data Types

- Data classification.

- It is a managed service that lets you store, annotate, and share metadata, which can be used to query and transform data.

- There is one AWS Glue Data Catalog per AWS account in each AWS Region.

- Identity and Access Management (IAM) policies control access.

- Can be used for data governance.

- A Glue Data Catalog has the following components:

- AWS Glue database.

- A set of associated Data Catalog table definitions, organized into a logical group.

- Create a new database.

- Give it a name.

- Give the S3 URI of the folder where you want to store the database.

- Give a description.
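The same database can be created programmatically. The sketch below uses the boto3 Glue client's `create_database` call; the helper names and the example values are assumptions for illustration, and `glue` is expected to be `boto3.client("glue")`.

```python
def database_input(name, location_uri, description=""):
    """Build the DatabaseInput structure expected by Glue's create_database."""
    return {
        "Name": name,
        "LocationUri": location_uri,  # S3 URI of the database folder
        "Description": description,
    }


def create_database(glue, name, location_uri, description=""):
    """Create a Data Catalog database; `glue` is a boto3 Glue client."""
    glue.create_database(
        DatabaseInput=database_input(name, location_uri, description)
    )
```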

- AWS Glue tables

- The metadata definition that represents your data.

- The data resides in its original store.

- This is just a representation of the schema.

- We can add tables using a crawler, manually, or from an external schema.

- Add table manually

- Enter table name.

- Select database.

- Select source type (S3)

- Select S3 path

- Select classification as CSV.

- Select Delimiter.

- Add the columns from the source that are to be mapped.
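As a rough equivalent of the manual console steps, a CSV table definition can be registered with boto3's `create_table`. This is a sketch under assumptions: the helper names and column list are hypothetical, and the SerDe and format classes are the standard Hive text classes commonly used for delimited files.

```python
def csv_table_input(name, s3_path, columns, delimiter=","):
    """Build a TableInput for a delimited text table stored in S3.

    `columns` is a list of (column_name, glue_type) pairs.
    """
    return {
        "Name": name,
        "TableType": "EXTERNAL_TABLE",
        "Parameters": {"classification": "csv"},
        "StorageDescriptor": {
            "Columns": [{"Name": n, "Type": t} for n, t in columns],
            "Location": s3_path,
            "InputFormat": "org.apache.hadoop.mapred.TextInputFormat",
            "OutputFormat": "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat",
            "SerdeInfo": {
                "SerializationLibrary": "org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe",
                "Parameters": {"field.delim": delimiter},
            },
        },
    }


def create_table(glue, database, table_input):
    """Register the table in the Data Catalog; `glue` is a boto3 Glue client."""
    glue.create_table(DatabaseName=database, TableInput=table_input)
```

Only metadata is written here; the data itself stays in its original S3 location.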

- Add tables using a crawler.

- Give crawler a name.

- Crawler source type.

- Repeat crawls of S3 data stores.

- Choose data store.

- Choose path.

- Add another data store.

- Choose the IAM role that lets Glue access S3.

- Set frequency.

- Configure output

- Database

- Table prefix

- Select the crawler and run it. When it finishes, the new table appears under Tables, with the same name as the S3 folder.

- Click on the table to see its details.
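The crawler steps above map onto boto3's `create_crawler` and `start_crawler` calls. A minimal sketch, assuming hypothetical helper names and placeholder values; the optional `schedule` is a Glue cron expression and is omitted for an on-demand crawler.

```python
def crawler_args(name, role, database, s3_paths, table_prefix="", schedule=None):
    """Build keyword arguments for Glue's create_crawler."""
    args = {
        "Name": name,
        "Role": role,  # IAM role that lets Glue read the S3 paths
        "DatabaseName": database,  # output database
        "Targets": {"S3Targets": [{"Path": p} for p in s3_paths]},
        "TablePrefix": table_prefix,
    }
    if schedule:
        args["Schedule"] = schedule  # e.g. "cron(0 12 * * ? *)"
    return args


def create_and_run_crawler(glue, **kwargs):
    """Create the crawler, then start it; `glue` is a boto3 Glue client."""
    args = crawler_args(**kwargs)
    glue.create_crawler(**args)
    glue.start_crawler(Name=args["Name"])
```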

- AWS Glue connections

- A Data Catalog object that contains the properties that are required to connect to a particular data store.

- Adding connection.

- Give name.

- Select the connection type (for example, JDBC).

- Select database engine.

- Select instance.

- Select DB name.

- Username

- Password
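A JDBC connection can also be defined with boto3's `create_connection`. This sketch assumes a MySQL- or PostgreSQL-style JDBC URL (`jdbc:engine://host:port/db`); other engines use different URL shapes. The helper names and all example values are hypothetical.

```python
def jdbc_connection_input(name, engine, host, port, db_name, username, password):
    """Build a ConnectionInput for a JDBC data store.

    Assumes a URL of the form jdbc:<engine>://<host>:<port>/<db_name>.
    """
    return {
        "Name": name,
        "ConnectionType": "JDBC",
        "ConnectionProperties": {
            "JDBC_CONNECTION_URL": f"jdbc:{engine}://{host}:{port}/{db_name}",
            "USERNAME": username,
            "PASSWORD": password,
        },
    }


def create_connection(glue, connection_input):
    """Store the connection in the Data Catalog; `glue` is a boto3 Glue client."""
    glue.create_connection(ConnectionInput=connection_input)
```

In practice the password would come from a secret store rather than source code.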

- AWS Glue crawlers

- A program that connects to a data store (source or target), progresses through a prioritized list of classifiers to determine the schema of your data, and then creates metadata tables in the AWS Glue Data Catalog.

- Schema registries.

- AWS Glue ETL has the following components:

- Blueprints

- Workflows

- AWS Glue jobs

- The business logic that is required to perform ETL work. It is composed of a transformation script, data sources, and data targets. Job runs are initiated by triggers that can be scheduled or triggered by events.

- Adding a job

- Click add job

- Give it a name.

- Give it an IAM role.

- Choose the S3 path of the Scripts folder.

- Give path to temp directory.

- Choose data source.

- Choose transformation type.

- Choose data target.

- Choose the data target directory.

- You will see schema mapping from source to target on next screen.

- Save job and edit script.

- Save script and run job.

- We will have our Parquet file in S3.

- We can view this file in Athena.
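The job definition from the steps above can be sketched with boto3's `create_job` and `start_job_run`. The helper names and example paths are assumptions; `glueetl` is the command name for a Spark ETL job, and `--TempDir` is the job argument Glue reads for the temp directory. The ETL script itself is assumed to already exist at `script_location`.

```python
def job_args(name, role, script_location, temp_dir):
    """Build keyword arguments for Glue's create_job (Spark ETL job)."""
    return {
        "Name": name,
        "Role": role,
        "Command": {
            "Name": "glueetl",
            "ScriptLocation": script_location,  # S3 path of the script
            "PythonVersion": "3",
        },
        "DefaultArguments": {
            "--TempDir": temp_dir,  # S3 path of the temp directory
            "--job-language": "python",
        },
    }


def create_and_run_job(glue, **kwargs):
    """Create the job and start one run; returns the job run ID."""
    args = job_args(**kwargs)
    glue.create_job(**args)
    return glue.start_job_run(JobName=args["Name"])["JobRunId"]
```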

- AWS Glue triggers.

- Initiates an ETL job. Triggers can be defined based on a scheduled time or an event.

- Adding a trigger

- Give name

- Give Trigger type

- Give frequency

- Start hour, minute.

- Select job

- Finish and activate trigger.
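A scheduled trigger like the one above can be sketched with boto3's `create_trigger`. The helper names are hypothetical. Glue schedules use a six-field cron expression (minutes, hours, day of month, month, day of week, year) evaluated in UTC, so a daily run at a given hour and minute looks like this:

```python
def daily_cron(hour, minute):
    """Return a Glue cron expression for a daily run at hour:minute UTC."""
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    return f"cron({minute} {hour} * * ? *)"


def trigger_args(name, job_name, hour, minute):
    """Build keyword arguments for Glue's create_trigger."""
    return {
        "Name": name,
        "Type": "SCHEDULED",
        "Schedule": daily_cron(hour, minute),
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,  # activate the trigger immediately
    }


def create_trigger(glue, name, job_name, hour, minute):
    """Create and activate the trigger; `glue` is a boto3 Glue client."""
    glue.create_trigger(**trigger_args(name, job_name, hour, minute))
```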

- AWS Glue dev endpoints.

- A development endpoint is an environment that you can use to develop and test your AWS Glue scripts.

- It’s essentially an abstracted cluster.

- NB: the cost can add up.

- AWS Glue Studio.

- AWS Glue security has the following components:

- Security configuration.

- We can query the data using Amazon Athena.

- Create a folder for Athena results in S3.

- Go to settings and set the query result location to the path of that folder in the bucket.

- Select data source.

- Select database.

- Select the table and click Preview table to get the results.
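The table preview can also be issued through boto3's Athena client with `start_query_execution`. A minimal sketch with hypothetical helper names; the output location is the S3 results folder created above, and the query runs asynchronously, so the returned ID would be polled with `get_query_execution` before reading results.

```python
def preview_query_args(database, table, output_location, limit=10):
    """Build keyword arguments for Athena's start_query_execution."""
    return {
        "QueryString": f'SELECT * FROM "{table}" LIMIT {int(limit)}',
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def preview_table(athena, database, table, output_location, limit=10):
    """Start the preview query; returns the query execution ID."""
    args = preview_query_args(database, table, output_location, limit)
    return athena.start_query_execution(**args)["QueryExecutionId"]
```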

- Partitions in AWS Glue

- Folders where data is stored on S3, which are physical entities, are mapped to partitions, which are logical entities, i.e. columns in the Glue table.
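To make the folder-to-column mapping concrete, here is a small pure-Python illustration; it does not call Glue. The function name is hypothetical. It follows the convention that Hive-style folders (`year=2024`) give the partition column its name, while plain folders get positional names such as `partition_0`, which is how crawlers name them.

```python
def partition_columns(key, table_root):
    """Map the folders between the table root and the file to partition columns."""
    if not key.startswith(table_root):
        raise ValueError("key is not under the table root")
    relative = key[len(table_root):].strip("/")
    folders = relative.split("/")[:-1]  # drop the file name
    columns = {}
    for index, folder in enumerate(folders):
        if "=" in folder:
            # Hive-style folder: the column name is embedded in the path
            name, value = folder.split("=", 1)
        else:
            # Plain folder: fall back to a positional column name
            name, value = f"partition_{index}", folder
        columns[name] = value
    return columns
```

For example, `partition_columns("logs/year=2024/month=01/a.csv", "logs/")` yields the columns `year` and `month`.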

- Example

- We have some logs stored in S3 and we have user data in our RDS.

- We need to summarize and move all this data to our Redshift data warehouse so that we can analyze and understand which demographics are actually contributing to our adoption.

- We can point crawlers to our S3 and RDS; they will infer the datasets and data structures inside the files and store the information in a catalog.

- We can then point Glue to the tables in the catalog, and it will automatically generate the scripts that are needed to extract and transform the data into the Redshift data warehouse.

Build ETL Pipeline using AWS glue and step functions

- Typical Glue ETL pipeline

- We can have data in a JDBC data source.

- An AWS Glue job is used to ETL the data into S3.

- The S3 raw bucket is the area where data is immutable: we apply no business transformation to it and just load it from the source.

- We use a Glue crawler to catalog our data so that it is discoverable and searchable.

- Once our data is in the S3 raw bucket, we use a Glue job to convert it into cleansed data.

- The cleansing operation depends on business needs: it can be standardizing column names, standardizing currency or date formats, or removing duplicate data.

- Once we have cleansed the data, we can use a Glue crawler again to catalog it.

- Then we run a Glue job again to convert the cleansed data into curated data.

- Curated data is created for a specific use case only, such as reports, dashboards, or machine learning.

- Again, we use a Glue crawler to catalog our data.
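The cleansing step is where most of the logic lives, so a small illustration may help. This is plain Python rather than a Glue Spark script, and the function names, column names, and input date format are assumptions. It shows three of the operations mentioned above: standardizing column names, standardizing a date format, and removing duplicates.

```python
import re
from datetime import datetime


def standardize_name(name):
    """Lower-case a column name and replace runs of other characters with '_'."""
    return re.sub(r"[^0-9a-z]+", "_", name.strip().lower()).strip("_")


def cleanse(rows, date_column, input_format="%d/%m/%Y"):
    """Return rows with standard column names, ISO dates, and no duplicates.

    `date_column` is the standardized name of the date column.
    """
    seen, cleansed = set(), []
    for row in rows:
        clean = {standardize_name(k): v for k, v in row.items()}
        parsed = datetime.strptime(clean[date_column], input_format)
        clean[date_column] = parsed.date().isoformat()
        fingerprint = tuple(sorted(clean.items()))  # identifies duplicate rows
        if fingerprint not in seen:
            seen.add(fingerprint)
            cleansed.append(clean)
    return cleansed
```

In a real Glue job the same operations would typically be expressed on a Spark DataFrame or DynamicFrame so that they scale with the data.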

- Methods of building pipelines

- AWS event-based framework.

- AWS Lambda.

- Amazon CloudWatch.

- Amazon DynamoDB.

- Amazon EventBridge.

- AWS Glue workflows.

- AWS Step Functions.

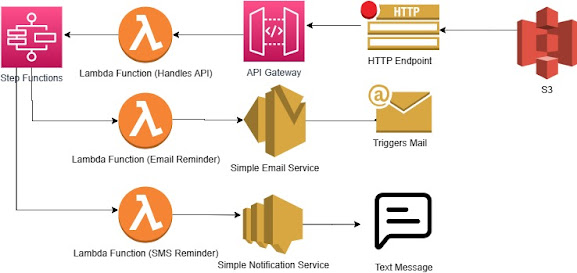

- Pipeline workflow using Step Functions.

- Step Functions-based pipeline benefits

- Reusability of jobs and crawlers.

- Observability.

- Built-in, out-of-the-box visibility.

- Pipeline step-level tracking.

- Development effort

- Early wire.

- Consistent development effort throughout.

- Integration with AWS services.

- Integration possible

- Direct AWS service invocation in the Step Functions workflow.

- Development environment.

- Step Functions workflow designer.

- Starting a Glue job

- Job parameters

- Job name

- Job metadata

- Synchronous or asynchronous run

- Next state
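These settings correspond to a Task state in the Amazon States Language. The sketch below builds such a state as a Python dict; the function name and example values are hypothetical. The `glue:startJobRun` resource is the Step Functions integration for Glue jobs, and appending `.sync` makes the state wait until the job run finishes instead of returning as soon as it starts.

```python
import json


def glue_job_state(job_name, arguments, next_state, synchronous=True):
    """Build a Step Functions Task state that starts a Glue job run."""
    resource = "arn:aws:states:::glue:startJobRun"
    if synchronous:
        resource += ".sync"  # wait for the job run to complete
    return {
        "Type": "Task",
        "Resource": resource,
        "Parameters": {
            "JobName": job_name,
            "Arguments": arguments,  # job parameters, e.g. {"--key": "value"}
        },
        "Next": next_state,
    }


if __name__ == "__main__":
    state = glue_job_state("ingest-raw", {"--source": "rds"}, "StartCrawler")
    print(json.dumps(state, indent=2))
```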

- Starting a Glue crawler.

- There is no out-of-the-box task for a Glue crawler.

- Crawler execution is a long-running process.

- Approach 1

- Use a task to invoke the crawler API.

- Event-based execution control using “wait for task token”

- Approach 2

- Leverage a Lambda task with custom code.

- You need two Lambda functions

- Start crawler.

- Get status of crawler.

- The crawler goes from the ready state to the starting state.

- From starting to running.

- From running to stopping.

- From stopping to ready again.

- Once the crawler status turns back to ready, we move to the next state.

- We can invoke a Lambda function from the Step Functions workflow to start the crawler.

- Inside the Lambda function, we code as follows

- Using boto3.client('glue')

- Using the start_crawler method

- To get the status of the crawler, we can use a Lambda function that receives the crawler name as input from the Step Functions workflow.

- get_crawler(Name=target)

- return response["Crawler"]["State"]

- We are using Python in the Lambda functions.
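Put together, the two Lambda functions might look like the sketch below. It is an illustration under assumptions: the event key `crawler_name`, the handler names, the returned dict shape, and the optional `glue` parameter (added so the handlers can be exercised with a stub) are not from the original. The `start_crawler` and `get_crawler` calls are the boto3 Glue client methods; note that the `get_crawler` response uses capitalized keys, `Crawler` and `State`.

```python
def _glue_client():
    """Create a Glue client; boto3 is available in the Lambda Python runtime."""
    import boto3
    return boto3.client("glue")


def start_crawler_handler(event, context, glue=None):
    """Lambda 1: start the crawler named in the Step Functions input."""
    glue = glue or _glue_client()
    target = event["crawler_name"]  # assumed input key
    glue.start_crawler(Name=target)
    return {"crawler_name": target}


def get_crawler_state_handler(event, context, glue=None):
    """Lambda 2: report the crawler state, e.g. READY, RUNNING, or STOPPING."""
    glue = glue or _glue_client()
    target = event["crawler_name"]
    response = glue.get_crawler(Name=target)
    return {"crawler_name": target, "state": response["Crawler"]["State"]}
```

Returning the crawler name alongside the state lets the workflow pass the same input back to the status function on the next poll.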

- Practical

- Create folders in S3 for ingesting and cleansing data and for scripts, as follows

- Cleansed

- Raw

- Scripts.

- Create tables in catalog depending on source.

- Create two crawlers, one to catalog the raw data and one to catalog the cleansed data.

- Create two jobs, one to ingest data from RDS (raw data) and a second to cleanse the data.

- Create two Lambda functions

- Start crawler function.

- Get crawler function

- Create state machine.
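A state machine for one stage of this pipeline could be sketched as follows: start a crawler through a Lambda function, poll its state with a second Lambda function until it is back to READY, then run a Glue job. The state names, function names, and wait interval are assumptions, and the status function is assumed to return an object with a `state` field while passing `crawler_name` through. The definition is built as a Python dict and serialized to Amazon States Language JSON.

```python
import json


def lambda_task(function_name, next_state):
    """Task state that invokes a Lambda function and keeps only its return value."""
    return {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
        "OutputPath": "$.Payload",
        "Next": next_state,
    }


def crawler_then_job(start_fn, status_fn, job_name, wait_seconds=30):
    """Build a definition: start crawler, poll until READY, run a Glue job."""
    return {
        "StartAt": "StartCrawler",
        "States": {
            "StartCrawler": lambda_task(start_fn, "Wait"),
            "Wait": {"Type": "Wait", "Seconds": wait_seconds, "Next": "GetCrawlerState"},
            "GetCrawlerState": lambda_task(status_fn, "IsCrawlerReady"),
            "IsCrawlerReady": {
                "Type": "Choice",
                "Choices": [
                    {"Variable": "$.state", "StringEquals": "READY", "Next": "RunJob"}
                ],
                "Default": "Wait",  # not ready yet: wait and poll again
            },
            "RunJob": {
                "Type": "Task",
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": job_name},
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    definition = crawler_then_job("start-crawler", "get-crawler-state", "cleanse-job")
    print(json.dumps(definition, indent=2))
```

The printed JSON is what would be pasted into the state machine definition or passed to the Step Functions `create_state_machine` API; a full pipeline would chain one such block per stage (raw, cleansed, curated).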
